Hi guys and gals, I am struggling to figure out how to achieve what seems to be a simple control circuit. I've wired a test rig and the system works as expected, apart from the lamp not illuminating! What I am trying to achieve is a 13 A socket controlled by a contactor. Part of the control circuit will be a 230 V 15 W warning lamp; once the lamp blows or is removed, the contactor will drop out, thus de-energising the 13 A socket. When tested, the lamp glows ever so slightly, so obviously there's an issue with the high resistance of the contactor coil! Is there a different type of coil available on the market with a lower resistance? Is there another method that I'm not thinking of? Advice would be much appreciated. Thanks in advance.

- Thread starter Nick Moore

> Why not use an LED indicator? It's far less likely to fail.

I totally agree with you. Part of the stipulation was that if the lamp does fail, the socket must de-energise. I thought that maybe the LED control gear might keep the circuit closed even if the diode fails?

You have the lamp and contactor coil in series... you have a dim lamp... what do you think is happening?

I know exactly what's happening; I've mentioned the very high resistance of the coil acting as a resistor. I'm looking for a solution.

If the failure of the lamp is that critical, I think you need to be looking at proper safety systems.

What's the overall purpose of this setup?

To comply with legislation in regard to portable X-ray equipment.

Are you designing this, or just thinking about it? Because there will be lives at stake here with radiation.

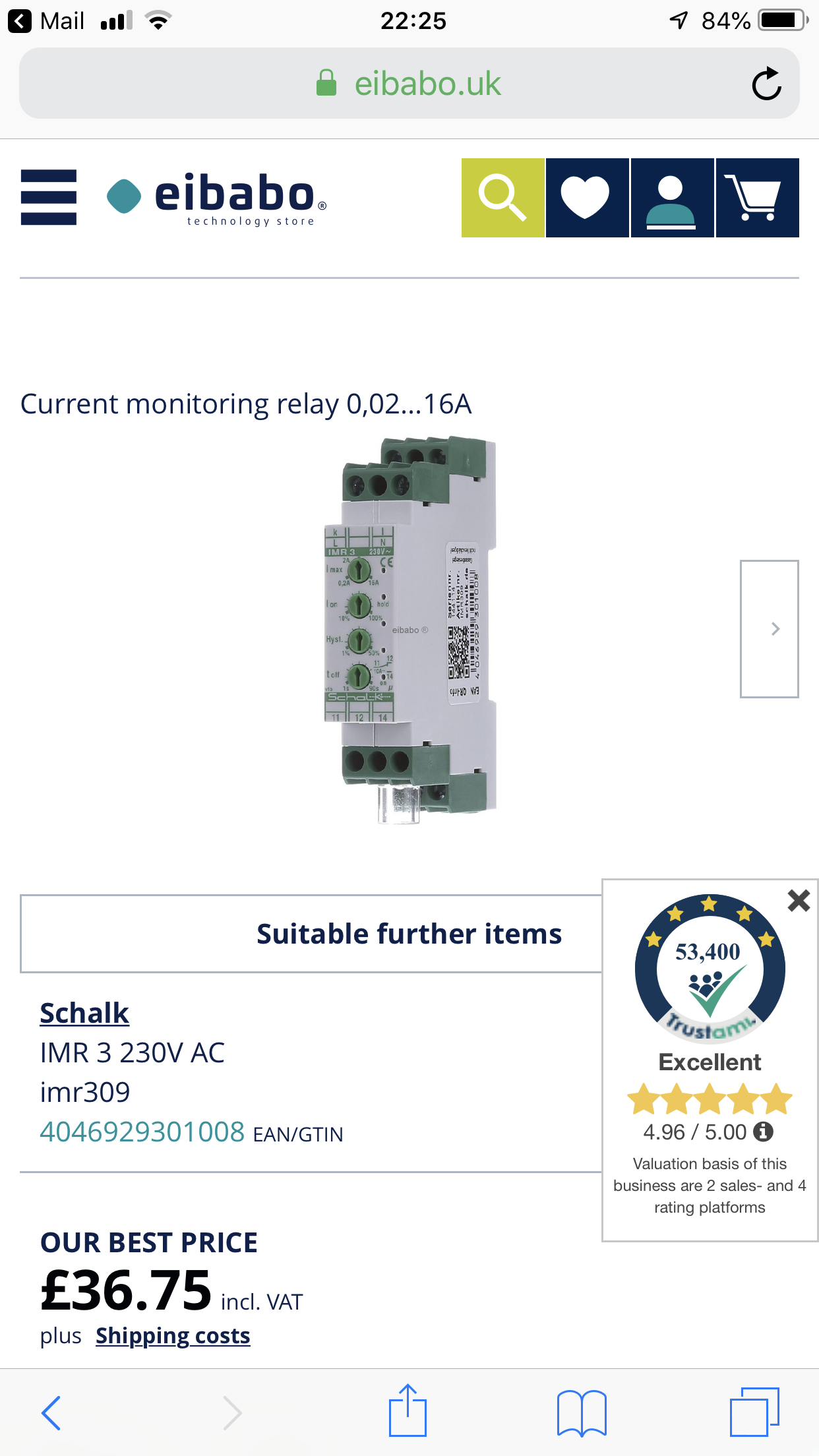

@Andy-1960 beat me to the punch, I'd be using a current sensing relay. Have its control contacts cut the contactor if your indicator lamp burns out.

If you need to run a lamp in series with the coil, you'll need to very carefully balance the current & voltage ratings to give reliable operation, a non-trivial challenge without detailed specs.
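A minimal sketch of the current-sensing-relay interlock suggested above, assuming the relay's contact feeding the contactor coil is closed only while the lamp current is at or above a trip threshold. The 0.03 A setting and the helper names are hypothetical, and the lamp is treated as simply drawing P/V.

```python
SUPPLY_V = 230.0    # nominal supply voltage (V)
THRESHOLD_A = 0.03  # hypothetical trip setting, about half the healthy lamp current

def lamp_current(lamp_watts, supply_v=SUPPLY_V):
    """Approximate running current of a filament lamp, I = P / V."""
    return lamp_watts / supply_v

def contactor_enabled(measured_current, threshold=THRESHOLD_A):
    """Hypothetical sensing-relay logic: the contact feeding the contactor coil
    stays closed only while the lamp draws at least the threshold current."""
    return measured_current >= threshold

print(contactor_enabled(lamp_current(15)))  # lamp working: True
print(contactor_enabled(0.0))               # filament open, no current: False
```

Setting the threshold well below the healthy lamp current but well above zero keeps supply-voltage variation from causing nuisance trips while still catching an open filament.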

These machines are used by trained professionals. We're simply talking about a power supply, a contactor and a warning light. Once energised, these mobile machines have to be activated by competent persons.

Yes buddy, this would be as close as I have got so far.

I think the point we're trying to make is... if this warning light is the only thing standing between a non-competent person getting an unwanted dose of X-rays and not, then it needs to be pretty bulletproof in terms of reliability.

I'd be thinking multiple lamps, possibly two sense relays and two contactors to provide some level of fail safe setup.
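To illustrate the two-channel idea, here is a rough sketch assuming each channel has its own sense relay and contactor, with the two contactors' main contacts in series with the socket. The part names and the 0.03 A threshold are hypothetical; the model only covers parts failing closed ("stuck on"), which is the dangerous direction.

```python
THRESHOLD_A = 0.03  # hypothetical sense-relay setting (A)
PARTS = ("sense_a", "sense_b", "contactor_a", "contactor_b")

def socket_live(lamp_current, threshold=THRESHOLD_A, stuck_on=frozenset()):
    """Two hypothetical channels, each a sense relay driving a contactor; the
    contactors' main contacts are in series, so both must be closed for the
    socket to be live. `stuck_on` names parts that have failed closed."""
    lamp_ok = lamp_current >= threshold
    for ch in ("a", "b"):
        sense_closed = lamp_ok or f"sense_{ch}" in stuck_on
        contactor_closed = sense_closed or f"contactor_{ch}" in stuck_on
        if not contactor_closed:
            return False  # this channel has opened, so the socket is dead
    return True

# Healthy lamp: socket live.
assert socket_live(15 / 230)
# Lamp blown: any single stuck-closed part still leaves the socket dead.
for part in PARTS:
    assert not socket_live(0.0, stuck_on={part})
# One fault in each channel defeats it, which is why the contactors need monitoring too.
assert socket_live(0.0, stuck_on={"sense_a", "contactor_b"})
```

The last case is the reason a proper safety design also checks that each contactor has actually opened, rather than relying on redundancy alone.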

There are special relays I believe that are designed for use in safety solutions that basically prevent operation if a component fails, but machine safety is a specialised area that I'm not familiar with.

Darkwood

Out of interest, what specific regulation are you trying to comply with here?

Off the bat, there would seem to be a few cheap solutions as opposed to building a monitoring circuit, redundancy being one example: why not just have two lamps, so that when one fails it is clearly obvious and maintenance will repair it, or the user is trained to report it?

That aside, the current-monitoring option seems the best, as previously mentioned. My concern, though, is your reliance on a contactor to isolate a power outlet. If this is for safety, then by design it needs to be fail-safe and have redundancy, so the contactor needs monitoring as well via a safety control circuit. When you are trying to comply with machinery codes or similar standards, what often seems a simple solution can require an expensive and complex system to ensure safe use and operation.

I appreciate everybody's replies and concerns. They're all valid in their own right. Thank you.

Is this the first installation of its type?

I would have thought that hundreds of these machines would be in use, and if it's governed by legislation there would be an approved and tested method of providing the socket supply.

There must be a similar system in hospitals with the "Danger: X-ray in use" sign above the door; presumably if that indicator fails, the equipment must be disabled.

As suggested above, a backup indicator with a warning notice would seem the simplest choice.

New HSE regulations for X-ray machines in veterinary surgeries.

It's an old-school surgery that just had a red lamp in a batten holder, with the X-ray machine simply on a standard 13 A plug top. Warning notices are present.

When designing those systems they have to be bulletproof, to make sure that nobody walks in while the X-ray machine is on. Otherwise, God help them; it's OK standing behind a lead shield.

You've got a 230v lamp in series with, I'd imagine, a 230v contactor coil. You will have approx 115v across each of them if you are powering them from a single phase supply. That's why your lamp is dim and contactor won't pull in.

If you want them to work in series, you would need a lamp and contactor coil rated for around 120 V each. If you can't find a contactor of that rating, then you could use a 120 V AC relay to switch the 240 V AC contactor coil.

Yep, spot on, apart from the contactor does pull in.

Ok, but if the coil is 240v then even if it does pull in, it's barely doing it and is not designed for that.

Use a 120v relay to switch that contactor (or even better a 120v contactor) and get a 120v lamp.

Darkwood

I may sound like a bit of a drama queen here, but given the risks of this set-up I would suggest that it requires a full risk assessment and a system designed with built-in redundancy so that it is as fail-safe as possible. At a guess, you will be looking at compliance with the machinery directives, as the failure of any single part, be it the lamp, the contactor, etc., should not introduce a dangerous scenario.

If the lamp fails and someone can simply walk into the room and be exposed to radiation then there is more to this than just ensuring the lamp works, one needs to do a full risk assessment for all scenarios of someone entering the room be it trained staff who recognise the hazards and understand what the light represents or just general members of the public or workforce who may be ignorant of the warnings (cleaner, janitor etc).

I would be looking at an electromagnetic door-locking system as a secondary safety measure, so that regardless of any failure of the primary hazard warning systems, it is not possible to enter the room while the equipment is in X-ray mode.

Shoei

As others have commented, X-ray machinery regulation goes beyond making an indicator secure. In the EU, X-ray kit must comply with Directive 2013/59/Euratom. If it's a medical facility, it's covered by CQC Regulation 15, which references UK law on ionizing radiation.

Nothing wrong with working on the indicators but be sure that someone else is taking responsibility for the safety of the whole setup. Get it written into your quote and sign-off papers.

> You will have approx 115v across each of them

Nope. Only if they have the same resistance, which they won't. You can't even guarantee that a 120 V lamp and a 120 V contactor of equal power would work, because an AC contactor's impedance and power factor vary with armature position. The lamp would receive an impulse of more than 120 V during pull-in, possibly blowing it, while its resistance would shoot up, reducing the voltage available to pull the contactor in. These are not simple resistors that will divide the voltage ratiometrically.
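To put rough numbers on the point about impedance and power factor, here is a sketch that treats the lamp as a fixed resistance and the coil as a complex impedance, then splits the supply between them with phasor arithmetic. The coil's VA and power-factor figures (8 VA at 0.3 sealed, 70 VA at 0.8 inrush) are hypothetical stand-ins for a real datasheet, and treating the hot filament as a fixed resistor is a simplification.

```python
import cmath
import math

SUPPLY_V = 230.0  # RMS supply voltage (V)

def lamp_impedance(v_rated, p_rated):
    """Hot filament treated as a pure resistance, R = V^2 / P.
    Simplification: a real filament's resistance falls sharply when it runs cool."""
    return complex(v_rated ** 2 / p_rated, 0.0)

def coil_impedance(v_rated, va, power_factor):
    """Inductive coil impedance from a VA figure and power factor, |Z| = V^2 / S."""
    return cmath.rect(v_rated ** 2 / va, math.acos(power_factor))

def series_split(z_lamp, z_coil, supply_v=SUPPLY_V):
    """Return (|V_lamp|, |V_coil|) for the two impedances in series across the supply."""
    current = supply_v / (z_lamp + z_coil)  # phasor current, supply as reference
    return abs(current * z_lamp), abs(current * z_coil)

lamp = lamp_impedance(230, 15)
sealed = coil_impedance(230, va=8, power_factor=0.3)   # hypothetical: armature closed
inrush = coil_impedance(230, va=70, power_factor=0.8)  # hypothetical: armature open

for label, coil in (("sealed", sealed), ("inrush", inrush)):
    v_lamp, v_coil = series_split(lamp, coil)
    # The magnitudes add to more than 230 V because the two voltages are out of phase.
    print(f"{label}: lamp {v_lamp:.0f} V, coil {v_coil:.0f} V")
```

With these made-up figures the lamp sees far more voltage while the armature is open than once it has sealed, which is the pull-in surge described above; the two voltages also do not simply add up to the supply.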

Leaving aside the safety considerations and thinking only about the electrical theory...

To sense a load current, you want to impose a minimum of voltage drop so that the load sees as near the full supply voltage as possible. E.g., with a 230 V supply and 230 V load, if you allow say 1% voltage drop in the sensing circuit, that must operate on 2.3 V maximum. The load will behave like a current source, not a voltage source, i.e. it will dictate the current. If the load is only ever going to be a 15 W lamp, its current will be 15/230 = 0.065 A. That makes 0.065*2.3 = 0.15 W available to trigger the circuit. A sensitive miniature relay behind a rectifier could detect this and control a contactor.
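The power budget in that paragraph reduces to a couple of lines. This sketch assumes, as the post does, that the load sets the current and the sensor is allowed a fixed fraction of the supply as its drop; the function name is just for illustration.

```python
def sense_budget(load_watts, supply_v=230.0, drop_fraction=0.01):
    """Power available to a series sensing element.

    Assumes the load dictates the current (I = P / V) and the sensor may take
    `drop_fraction` of the supply as its voltage drop. Returns (I, V_drop, P_sense).
    """
    current = load_watts / supply_v
    drop = supply_v * drop_fraction
    return current, drop, current * drop  # note P_sense = load_watts * drop_fraction

i, v_drop, p_sense = sense_budget(15)
print(f"{i:.3f} A through the sensor, {v_drop:.1f} V across it, {p_sense:.2f} W to work with")
```

Because the supply voltage cancels, the power available to the sensor is just the load power times the allowed drop fraction: 1% of 15 W.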

It's better to do it electronically. If the load will only ever be a 15 W lamp, a rectifier in series with the lamp, with two zener legs, will generate a constant voltage that can drive an opto-isolator. The opto output can be used to trigger a thyristor to energise the contactor coil. Better still is to use a current transformer, as zenering its output will allow a wide range of loads to be detected. This makes a non-linear detection circuit: it can cope with a very wide range of load powers between the detection threshold and maximum.
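A very idealised model of why clamping helps: with a plain resistance the signal grows in direct proportion to load current, while a zener-clamped sensor rises until the clamp conducts and then stays flat, so the detector sees a bounded input from the threshold right up to full load. The 100 ohm sense value and 2 V clamp are hypothetical, and the model ignores rectifier drops and the power the clamp itself has to handle.

```python
SENSE_OHMS = 100.0  # hypothetical effective sense resistance (ohm)
CLAMP_V = 2.0       # hypothetical clamp level, enough to drive an opto LED (V)

def linear_sense_voltage(load_current, sense_ohms=SENSE_OHMS):
    """Plain resistance: the signal scales directly with load current."""
    return load_current * sense_ohms

def clamped_sense_voltage(load_current, sense_ohms=SENSE_OHMS, clamp_volts=CLAMP_V):
    """Idealised zener-clamped sensor: linear until the clamp conducts, then flat."""
    return min(load_current * sense_ohms, clamp_volts)

for amps in (0.01, 15 / 230, 1.0, 13.0):
    print(f"{amps:7.3f} A: linear {linear_sense_voltage(amps):8.1f} V, "
          f"clamped {clamped_sense_voltage(amps):.1f} V")
```

In the current-transformer version the same shape applies on the CT secondary, which is what lets one circuit cover everything from a 15 W lamp to a full 13 A load.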

Let's look at why a simple resistive series detection circuit, or something like a contactor coil, can't practically be used as a load detector for a range of loads.

If the sensing circuit also has to be able to carry a full 13 A load, then you can't realistically allow a 1% volt drop, as the power dissipated would be 13*2.3 = 30 W, so something in there would have to shift as much heat as a soldering iron. Let's make it 0.5 V, for 13*0.5 = 6.5 W (ample to power a large contactor). Now, when the load is the 15 W lamp, it will only have 0.5/13*0.065 = 0.0025 V across it, so it would have to respond to 0.0025*0.065 = 0.00016 W, i.e. about 0.16 milliwatts, barely enough to light even a high-sensitivity LED.

So we see that if the sensing device is resistive, it has to deal with a very wide range of power dissipations (the square of the ratio of maximum load current to the detection threshold current). From this it follows that a non-linear sensing device is needed, such as the electronically sensed CT.
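The worked example for a fixed series resistance can be checked numerically. This sketch uses the post's own figures (13 A maximum, 0.5 V allowed drop at full load, a 15 W lamp at 230 V as the smallest load) and shows the power ratio coming out as the square of the current ratio.

```python
I_MAX = 13.0        # largest load current the sensor must carry (A)
DROP_AT_MAX = 0.5   # allowed volt drop at I_MAX (V), as in the worked example
I_MIN = 15 / 230    # 15 W lamp at 230 V (A)

r_sense = DROP_AT_MAX / I_MAX   # fixed sense resistance (ohm)
p_max = I_MAX ** 2 * r_sense    # heat at full load (W)
p_min = I_MIN ** 2 * r_sense    # signal power with only the lamp (W)

print(f"R = {r_sense * 1000:.1f} mOhm, P_max = {p_max:.2f} W, P_min = {p_min * 1000:.3f} mW")
print(f"power ratio = {p_max / p_min:.0f}, (I_max/I_min)^2 = {(I_MAX / I_MIN) ** 2:.0f}")
```

A range of roughly 200:1 in current becomes roughly 40,000:1 in power, which is the practical argument for a non-linear sensor.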

I think the only way to do this is electronically. A transistor circuit would energise your contactor, and if your lamp failed it would switch off your transistor. It's been a very long time since I had to concern myself with exactly what else you'd need. I was trained to fault-find and repair boards at component level around 20 years ago, but circuit design at this level is for someone else. PLCs are much more common now than the old transistor circuits I trained with, but I assume that's not an option, and it's probably overkill for one circuit unless you've already got one in the machine that can be reprogrammed to include the lamp.

Bobster

Unless you get a safety-rated PLC, going down that route is a non-starter. PLCs, even non-safety ones, can monitor the current drawn from their outputs and use this feedback to trigger events. (Certain modules and brands can; not all PLC output cards, and definitely not relay output modules.)

There are far simpler ways of doing this, as has already been stated. Use a current-sensing relay; you can get safety-rated versions of these too.

Shoei

Don't know the regs for vets' X-ray machines, but the simplest solution would be to use a changeover switch with each side driving a lamp, one saying 'safe' and the other saying 'not safe' or words to that effect. Any single fault condition is detectable: one light or the other must be on, and if both are off it means a lamp has failed and something needs attention.
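The changeover-switch idea can be checked exhaustively with a small truth table. This sketch assumes the switch feeds the 'not safe' lamp while the machine is live and the 'safe' lamp otherwise, and that a failed lamp simply stays dark; the function names are illustrative only.

```python
from itertools import product

def lamps_lit(machine_live, safe_lamp_ok, danger_lamp_ok):
    """Changeover switch: the 'not safe' lamp is fed while the machine is live,
    the 'safe' lamp otherwise. A failed lamp stays dark. Returns (safe_lit, danger_lit)."""
    safe_lit = (not machine_live) and safe_lamp_ok
    danger_lit = machine_live and danger_lamp_ok
    return safe_lit, danger_lit

def fault_showing(machine_live, safe_lamp_ok, danger_lamp_ok):
    """Both lamps dark means something needs attention."""
    safe_lit, danger_lit = lamps_lit(machine_live, safe_lamp_ok, danger_lamp_ok)
    return not safe_lit and not danger_lit

# Every combination: a fault shows exactly when the lamp that should be lit has failed.
for live, safe_ok, danger_ok in product([False, True], repeat=3):
    selected_ok = danger_ok if live else safe_ok
    assert fault_showing(live, safe_ok, danger_ok) == (not selected_ok)
```

One consequence the table makes visible: a failed lamp on the side not currently selected only shows up when the switch changes over.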

Superb advice everyone. This particular forum is a great experience for a first time user. I found a German device that senses 0.02amps to 16amps. The 15w lamp will use 0.06amps @230volts so in theory it should work a treat.

There does not seem to be any CT chamber, so I gather that terminals k and l would be wired in series with the load of the lamp, with L/N as the permanent line and neutral supply. Any views on this device?
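A quick sanity check that the lamp current sits comfortably inside the quoted 0.02 A to 16 A sensing range. The ±10% supply tolerance is a hypothetical allowance, and scaling current in proportion to voltage is a conservative simplification for a filament (it understates the current at low voltage and overstates it at high voltage).

```python
RELAY_MIN_A = 0.02  # lower end of the sensing range quoted in the post (A)
RELAY_MAX_A = 16.0  # upper end of the sensing range quoted in the post (A)

def lamp_current_range(lamp_watts, supply_v=230.0, low=0.9, high=1.1):
    """Rough (min, max) lamp current for a supply between `low` and `high` x nominal.
    Assumes current scales in proportion to voltage (conservative for a filament)."""
    nominal = lamp_watts / supply_v
    return nominal * low, nominal * high

def within_relay_range(lamp_watts):
    i_low, i_high = lamp_current_range(lamp_watts)
    return RELAY_MIN_A <= i_low and i_high <= RELAY_MAX_A

i_low, i_high = lamp_current_range(15)
print(f"15 W lamp: {i_low * 1000:.0f}-{i_high * 1000:.0f} mA, "
      f"{i_low / RELAY_MIN_A:.1f}x the relay minimum")
```

Whatever trip point the device offers would need to sit between zero and the low end of that lamp current range for an open filament to be detected reliably.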

mrloy99

> That would work.

No doubt I'm missing something here, but the ones I've done are switched from the surgeon's panel and just come on at the same time as the socket, not on the control circuit. I don't think there is a requirement to provide a fail-safe mechanism. A buzzer might be a better warning than a lamp.
